
Over the decades, computers have shrunk exponentially in both size and cost, to the point that they are now affordable for personal use. This is largely due to the miniaturization of transistors, highly efficient silicon integrated circuits, and the trend described by Moore’s Law.

Computers hold a vital place in modern society and have played a pivotal role in humanity’s development in recent decades. A species that began as tribes of hunter-gatherers has reached the moon and could become multi-planetary in the near future, thanks in no small part to our ability to mechanize repetitive procedures and to store and process data according to given instructions.

Take a glance around and you will find them everywhere. They power the smartphone in your pocket, the watch on your wrist, the pacemakers that regulate faulty hearts, the engine of your car, the elevators in your building, the traffic lights at a four-way intersection, your grandma’s cochlear implants and even specialty toilet seats… clearly, computers have become ubiquitous.

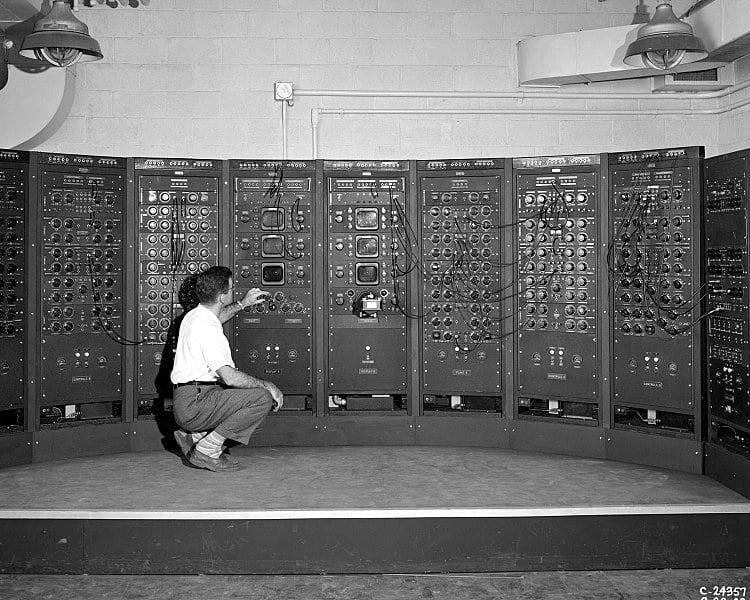

However, this wasn’t always the case. Early computers were huge, often filling an entire room! Yet these massive machines had only a tiny fraction of the processing power of the device that now fits handily in your pocket; they would take hours to produce results that your smartphone delivers in mere seconds. Considering that this progress took only a handful of decades, it is quite a feat. Peeling back the curtain on this technological advancement will help us better understand the leap in computing that has come to define this century, and humanity as a whole.


Why Were Early Computers So Big?

At the most fundamental level, a computer’s purpose is to take input (provided by humans or, increasingly, by the environment and other programs) and process it to produce meaningful output.

Rudimentary computing devices existed long before electricity. These were simple, hand-operated aids based on one-to-one correspondence (e.g., the abacus) that were used for arithmetic and simple calculations. Over time, such devices were refined to perform faster and more complex calculations.

Invention Of Punch Cards

A major leap came in 1804, when Joseph-Marie Jacquard developed a loom controlled by punch cards. The cards could be swapped out to feed in a new input while the machine itself stayed the same. It was not until 1833, however, that Charles Babbage, often called “the father of the computer”, began work on the first general-purpose computer: the revolutionary Analytical Engine, which was designed to take punch cards as input and use a printer for output.

Once electricity became widely available, electromechanical relays came into use as switching elements in computing machines. Analog computers, meanwhile, depended on physical quantities that could vary continuously: electrical, mechanical or hydraulic quantities were used to model the problem that needed to be solved. Such computers were used in World War I.

Dawn Of The Digital Computers

In contrast, digital computers represented quantities with discrete symbols, such as digits. This was their major advantage, because computations in a digital computer could be reproduced far more reliably.

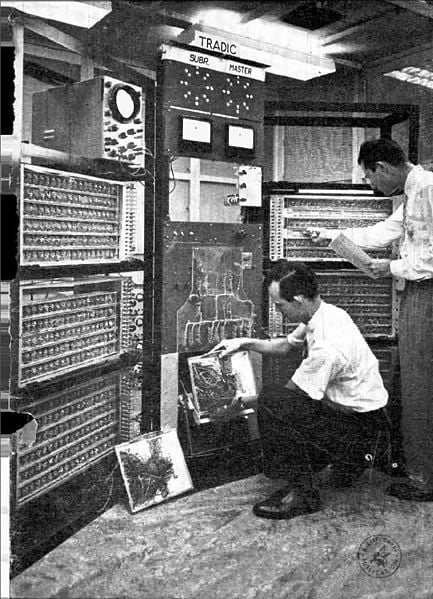

Discovery Of Transistors

These first-generation digital computers relied on vacuum tubes for their logic circuitry. They filled rooms bigger than an average house and broke down frequently. Vacuum-tube machines had their heyday in the 1950s but were then replaced by transistor-based designs, which were much faster, smaller and more reliable, and which helped computers gain mainstream interest. For comparison, a vacuum tube holding one bit was roughly the size of a thumb, while a transistor holding one bit was about the size of a fingernail.

Age Of Integrated Circuits

The next leap came in the 1960s, when discrete transistors gave way to integrated circuits (ICs). This marked the advent of the third generation of computing and came about through the use of tiny metal-oxide-semiconductor field-effect transistors (MOSFETs). Transistors shrank to the point that an entire electronic circuit could be fabricated on a single flat piece of semiconductor material, with silicon being the most widely used. This boosted processing power while simultaneously reducing the size of computers. An integrated circuit could fit thousands of bits into a space the size of a hand!

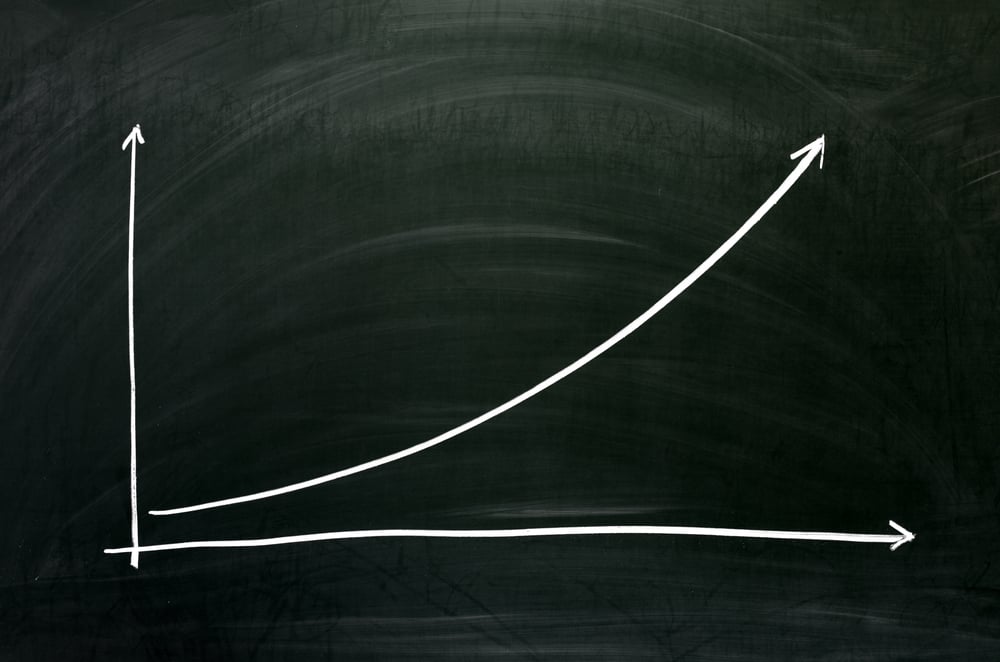

This development put available computing power on an exponential curve, leading to the world we live in today.

Moore’s Law And The Advent Of Personal Computers

The silicon computer chip became the gateway to exponential growth in processing power. Transistors kept getting smaller, and with each iteration, more computing power could be packed into the same amount of space; today’s most advanced chips are built on manufacturing processes labeled “7 nanometers”!

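Gordon E. Moore, who later co-founded Intel, published an article in Electronics magazine on April 19, 1965, describing a trend that has since become famous as Moore’s Law. He highlighted the incredible impact of miniaturization and observed that the number of components that could be placed on an integrated circuit was doubling roughly every year; in 1975 he revised that estimate to every two years. The often-quoted figure of 18 months for a doubling in processing power is a popular variant of his prediction. Sustained over decades, this doubling has produced computers that are millions of times faster than those used only half a century ago.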
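To see why half a century of steady doubling produces such enormous numbers, here is a minimal Python sketch. The function name `growth_factor` is hypothetical, and it assumes a perfectly constant doubling period, which real hardware never followed exactly.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """How many times a quantity grows if it doubles every `doubling_period_years`.

    Assumes a perfectly steady doubling rate (a simplification of Moore's Law).
    """
    return 2 ** (years / doubling_period_years)


# Compare the two commonly quoted doubling periods over 50 years
for period in (2.0, 1.5):
    print(f"doubling every {period} years for 50 years: x{growth_factor(50, period):,.0f}")
```

Doubling every two years for 50 years amounts to 25 doublings, or 2^25 (about 33.5 million); the 18-month variant gives roughly 10 billion. Either way, “millions of times faster” is, if anything, conservative.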

This also meant that the cost of a given amount of processing power fell exponentially, halving with each doubling period. For example, if a chip packing 2,000 transistors cost $1,000 in 1970, halving its cost every two years would bring it to $500 in 1972, $250 in 1974 and about $0.98 in 1990. Extending the same trend to the present would put that chip at a tiny fraction of a cent, clearly showing the power of exponential growth. This is what allowed computers to become a household phenomenon, traveling from our desks to our bags to our pockets and fingertips!
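The cost figures above follow from repeated halving, which the short Python sketch below reproduces. The function `chip_cost` and its parameters are hypothetical, and the $1,000 starting price for a 2,000-transistor chip is the article’s illustrative assumption, not historical pricing data.

```python
def chip_cost(start_cost: float, start_year: int, year: int,
              halving_period_years: float = 2.0) -> float:
    """Cost of the same chip in `year`, assuming it halves every `halving_period_years`.

    A simplified model: real prices never followed a perfectly smooth curve.
    """
    elapsed = year - start_year
    return start_cost / 2 ** (elapsed / halving_period_years)


# Illustrative chip: $1,000 in 1970, cost halving every two years
for year in (1970, 1972, 1974, 1990):
    print(year, f"${chip_cost(1000, 1970, year):,.2f}")
```

Note that the $500-in-1972 step only works with a two-year halving period; with `halving_period_years=1.5` (the 18-month variant), the 1972 price would instead come out to roughly $397.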

Conclusion

Replacing bulky vacuum tubes with transistors, and then shrinking those transistors ever further, has dramatically reduced the size of computers. Combined with advances in storage technology, power supplies and cooling methods, this rapid, sustained improvement has given us the powerful personal computers we use today.

Moore’s Law has been surprisingly accurate in predicting the size and computing power of computers over the last half-century, but it is approaching a physical limit, as transistors are nearing the scale of individual atoms and are becoming ever harder to shrink. That being said, further advances could come from other ingenious approaches, such as using better materials than silicon or building 3D circuits. Computing could take another major leap, unlocking unprecedented power, once we crack the challenge of building practical quantum computers. In any case, it will be exciting to see how future advances in computer hardware further change the way we live and communicate with each other. Indeed, it’s a good time to be alive!