

After its first appearance a few years back, deepfake technology has evolved from an innocuous tech geek’s chicanery to a malicious slandering weapon. In this article, we’ll see what exactly this dreaded deepfake tech is, how it works, what different forms it comes in, and how we can detect or bust a deepfake.


What Is Deepfake?

Deepfake is a media technology in which a person takes existing text, pictures, video, or audio and manipulates, i.e., ‘fakes’, it to look or sound like someone else, using advanced artificial intelligence (AI) and neural network (NN) technology.

Want to put abusive words in the mouth of your nemesis? Or swap the movie protagonist with your favorite Hollywood superstar? Or do you just want to make yourself dance like Michael Jackson? Then deepfake is what you need!

Deepfake content is growing exponentially. Unfortunately, deepfake tech has already been repeatedly used to gain political mileage, to tarnish the image of a rival, or to commit financial fraud.

Let’s now look into the three main types of deepfakes and explore the data science that allows them to work. We’ll also focus on deepfake detection technologies that researchers and security consultants are working on to curb the malicious use of deepfakes.

Deepfake Text

In the early days of artificial intelligence (AI) and natural language processing (NLP), it was posited that creative activities like painting or writing would be a challenge for a machine. Fast-forward to 2021: thanks to the powerful language models and libraries that researchers and data science professionals have built incrementally over the years, top-rated AI text generators can now write with humanlike pith and coherence.

GPT-2

Take, for example, GPT-2, a text-generation system released in 2019 by OpenAI, a research lab based in San Francisco. This tech has impressed both laymen and domain experts with its ability to churn out coherent text from minimal prompts.

OpenAI engineers trained GPT-2, whose largest version has 1.5 billion parameters, on more than 8 million text documents scraped from the web (scraping is a method of extracting relevant data from webpages).

The essence of deepfakes, and of related technologies such as deep learning that leverage artificial intelligence, lies in training the software to learn and adapt using the past data it is fed through data sets.

Using GPT-2, you can just punch in a headline and the deepfake text algorithm will generate a fictitious news story around it. Or simply supply it with the first line of a poem and it will return the whole verse.
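To make the "prompt in, continuation out" idea concrete, here is a deliberately tiny stand-in written in Python. It is not GPT-2 and does not use a neural network: it is a bigram (word-pair) model that only remembers which word followed which in its training text. The function names and the sample corpus are made up for illustration.

```python
import random
from collections import defaultdict


def build_bigram_model(corpus):
    """Map each word to the list of words that followed it in the corpus."""
    words = corpus.split()
    model = defaultdict(list)
    for current, following in zip(words, words[1:]):
        model[current].append(following)
    return model


def continue_text(model, prompt, max_new_words=10, seed=0):
    """Extend the prompt one word at a time by sampling a seen successor."""
    rng = random.Random(seed)  # fixed seed so a run can be repeated
    output = prompt.split()
    if not output:
        return ""
    for _ in range(max_new_words):
        candidates = model.get(output[-1])
        if not candidates:  # the last word never had a successor: stop early
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


if __name__ == "__main__":
    corpus = "the cat sat on the mat and the cat slept on the sofa"
    model = build_bigram_model(corpus)
    print(continue_text(model, "the cat", max_new_words=8))
```

A model like GPT-2 works on the same predict-the-next-token principle, but it conditions on the whole preceding text rather than just the last word, which is what lets it stay coherent over entire paragraphs.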

Many media houses are using deepfake algorithms coupled with web scraping to generate stories or blogs that are written by the software itself.

Researchers at the Middlebury Institute of International Studies’ Center on Terrorism, Extremism, and Counterterrorism (CTEC) warn that extremist organizations can misuse tools like GPT-2 to propagate racial supremacy or disseminate radical messages.

Deepfakes On Social Media

In tandem with writing stories or blogs, deepfake technology can also be used to create fake online profiles that are hard for an ordinary user to spot. For example, Maisy Kinsley, a purported Bloomberg journalist who did not actually exist, had profiles on social networking sites like LinkedIn and Twitter and was plausibly a deepfake. Her profile picture appeared strange, perhaps computer-generated. The profile was probably created for financial benefit, as it repeatedly tried to connect with short-sellers of Tesla stock on social media. Short-sellers are investors who are bearish on a stock: they short it, i.e., sell borrowed shares in the conviction that the price will fall, then buy the shares back at a lower price and pocket the difference as profit.

Another profile, under the name Katie Jones, claimed a position at the Center for Strategic and International Studies and was found to be a deepfake created with the mala fide intention of spying.

Detecting Textual Deepfakes

Researchers from the Allen Institute for Artificial Intelligence have developed a software tool called Grover to detect synthetic content floating around online. The researchers claim that this software is able to detect deepfake-written essays 92% of the time. Grover was evaluated on a test set compiled from Common Crawl, an open repository of web crawl data. Similarly, a team of scientists from Harvard and the MIT-IBM Watson laboratory has designed the Giant Language Model Test Room, a web tool that seeks to discern whether a piece of input text was generated by AI.

Deepfake Video

Fake photos and videos are the mainstay of the deepfake arsenal. They are the most widely used form of deepfake, given that we live in a world of ubiquitous social media, in which pictures and videos convey events and stories better than plain text.

Modern video-generating AI is more capable, and perhaps more dangerous, than its natural language counterpart. Seoul-based tech company Hyperconnect recently developed a tool called MarioNETte that can generate deepfake videos of historical figures, celebrities, and politicians. This is done through facial reenactment by another person, whose facial expressions are then superimposed on the targeted personality whose deepfake is to be created.

How Is Deepfake Video Produced?

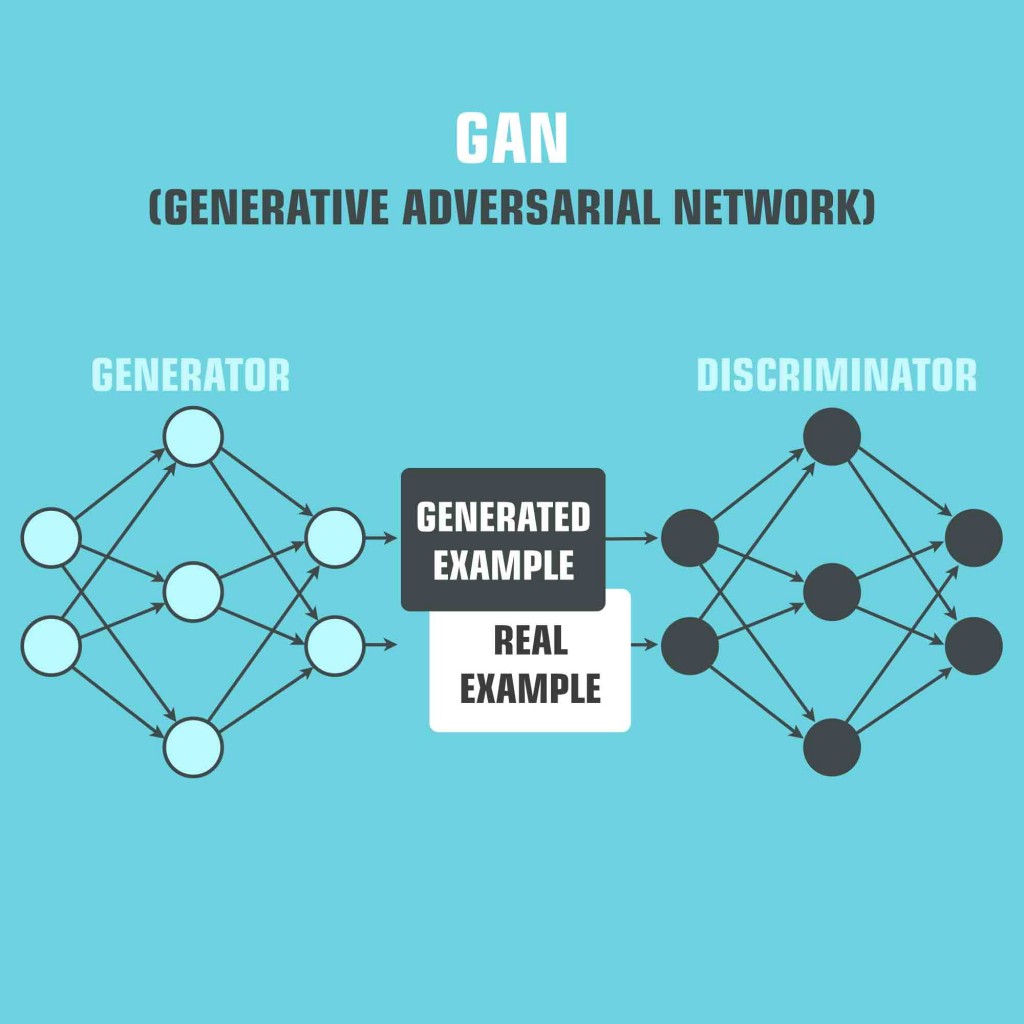

This video trickery employs a technique called a generative adversarial network (GAN). GANs belong to a branch of machine learning called neural networks. These networks are designed to emulate the neuronal processes of the human brain. Programmers can train neural networks to perform a specific task, such as recognizing or manipulating images.

In a GAN used for deepfake generation, two neural networks are pitted against each other so that the resulting deepfakes look as real as possible. The essence of a GAN lies in this rivalry: the picture forger and the forgery detector repeatedly attempt to outsmart one another. Both neural networks are trained using the same data set.

The first net is called the generator. Its job is to turn noise vectors (lists of random numbers) into forged images that look as realistic as possible. The second net, called the discriminator, judges the veracity of the generated images. It compares the forged images from the generator with the genuine images in the data set to determine which are real and which are fake. On the basis of those results, the generator adjusts its parameters for generating images. This cycle goes on until the discriminator can no longer tell that a generated image is bogus, and that image is then used in the final output. This is why deepfakes look so eerily real.
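The generator-versus-discriminator cycle can be sketched in a few lines of plain Python. This is a toy under loudly stated assumptions, not how production deepfake software is built: the "images" are single numbers, the real data are random values centered on 4.0, the generator is just `g(z) = z + b` with one learnable shift `b`, and the discriminator is a single logistic unit `D(x) = sigmoid(w*x + c)`. All names and settings here are hypothetical.

```python
import math
import random


def sigmoid(u):
    """Numerically safe logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, batch=64, lr=0.05, real_mean=4.0, seed=0):
    """Alternate discriminator and generator updates on 1-D data."""
    rng = random.Random(seed)
    b = 0.0          # generator parameter: g(z) = z + b
    w, c = 0.0, 0.0  # discriminator parameters: D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]  # noise in, forgery out

        # Discriminator step: gradient ascent on log D(real) + log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            grad_w += (1.0 - d) * x
            grad_c += (1.0 - d)
        for x in fake:
            d = sigmoid(w * x + c)
            grad_w -= d * x
            grad_c -= d
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Generator step: gradient ascent on log D(fake), i.e. try to fool D.
        grad_b = sum((1.0 - sigmoid(w * x + c)) * w for x in fake) / batch
        b += lr * grad_b
    return b, w, c


if __name__ == "__main__":
    b, w, c = train_toy_gan()
    print(f"generator shift b = {b:.2f} (real data are centered on 4.0)")
```

In this toy, the generator starts out producing numbers centered on 0, and the discriminator's feedback should nudge `b` toward the center of the real data. Real GANs replace both one-line models with deep networks and the numbers with images, and their training is not guaranteed to settle down.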

Detecting Deepfake Videos

Forensic experts across the globe are toiling hard to come up with ways and tools to identify deepfakes, as they are becoming more and more convincing every day.

For example, consider the deepfake demonstration video of Obama released by BuzzFeed in 2018, which stupefied viewers across the globe.

As machine-learning tools are reaching the masses, it has become much easier to create convincing fake videos that could be used to disseminate propaganda-driven news or to simply harass a targeted individual.

The Defense Advanced Research Projects Agency (DARPA), the research arm of the US Defense Department, has developed tools for detecting deepfakes under a program called Media Forensics. Originally, the program was meant to automate existing forensic tools, but with the rise of deepfakes, it has turned to AI to counter AI-driven deepfakes. Let’s see how it works.

Technically, a video generated using deepfake technology differs discernibly from the original in the way its metadata is distributed. These differences are referred to as glimpses in the matrix, and they are what DARPA’s deepfake detection tool tries to leverage when detecting deepfake media.

Siwei Lyu, a professor in the computer science department at the State University of New York, has noted that faces created using deepfake technology seldom blink. Even when they do, the blinking seems unnatural. He posits that this is because most deepfake models are trained on still images, and still photographs of a person are generally taken when their eyes are open. Besides eye blinking, other data points on facial movements, such as how a person raises their upper lip while conversing or how they shake their head, can also provide clues as to whether a streamed video is fake.
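The blinking clue lends itself to a simple check. The sketch below is a hypothetical illustration, not Lyu's actual method: it assumes some upstream face-tracking step has already produced one "eye openness" score per video frame (0 for shut, 1 for wide open), and the two thresholds are made-up values chosen only for the example.

```python
def count_blinks(eye_openness, closed_below=0.2):
    """Count closed-eye episodes in a sequence of per-frame openness scores."""
    blinks = 0
    eyes_closed = False
    for score in eye_openness:
        if score < closed_below:
            if not eyes_closed:  # eyes have just shut: a new blink starts
                blinks += 1
                eyes_closed = True
        else:
            eyes_closed = False
    return blinks


def blinks_per_minute(eye_openness, fps, closed_below=0.2):
    """Convert the blink count into a rate, given the video frame rate."""
    minutes = len(eye_openness) / fps / 60.0
    if minutes == 0:
        return 0.0
    return count_blinks(eye_openness, closed_below) / minutes


def looks_suspicious(eye_openness, fps, min_blinks_per_minute=5.0):
    """Flag a clip whose subject blinks less often than the assumed minimum."""
    return blinks_per_minute(eye_openness, fps) < min_blinks_per_minute


if __name__ == "__main__":
    one_minute_no_blinks = [1.0] * (30 * 60)  # 60 seconds at 30 frames per second
    print(looks_suspicious(one_minute_no_blinks, fps=30))
```

A low blink rate on its own proves nothing, so a check like this would at most be one signal among several, alongside the lip and head movement cues mentioned above.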

Deepfake Audio

The power of artificial intelligence and neural networks isn’t limited to text, pictures, and video. They can clone a person’s voice with the same ease. All that is required is a data set of audio recordings of the person whose voice is to be emulated. Deepfake algorithms learn from that data set and become able to recreate the prosody of the targeted person’s speech.

Commercial software such as Lyrebird and Deep Voice is being released on the market, for which you need to speak only a few sentences before the AI grows accustomed to your voice and intonation. As you feed in more audio of yourself, the software becomes powerful enough to clone your voice. Once it has been fed a data set of your audio samples, you can give it a sentence or a paragraph and it will narrate the text in your voice!

Detecting Deepfake Audio

Right now, there are not many dedicated tools for detecting deepfake audio, but developers and cybersecurity companies are working on better protective solutions.

For example, last year, developers at tech startup Resemble developed an open-source tool called Resemblyzer for the detection of deepfake audio clips. Resemblyzer uses advanced machine-learning algorithms to derive computational representations of voice samples and predict whether they are real or fake. Whenever a user submits an audio file for evaluation, it generates a mathematical representation summarizing the unique characteristics of the submitted voice sample. This conversion makes it possible for the machine to detect whether the voice is real or artificially produced by deepfake tools.
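Once a voice sample has been boiled down to a list of numbers, comparing two voices becomes a matter of comparing two vectors. One common way to do that is cosine similarity, sketched below in plain Python. This is not Resemblyzer's API: the function names, the tiny three-number "voice vectors", and the 0.75 threshold are all assumptions made for illustration.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("vectors must be non-zero")
    return dot / (norm_a * norm_b)


def same_speaker(reference_vector, candidate_vector, threshold=0.75):
    """Treat two voice vectors as the same speaker if they point the same way."""
    return cosine_similarity(reference_vector, candidate_vector) >= threshold


if __name__ == "__main__":
    genuine = [0.9, 0.1, 0.4]     # hypothetical vector from a known real recording
    submitted = [0.1, 0.9, -0.3]  # hypothetical vector from a clip under test
    print(same_speaker(genuine, submitted))
```

A score near 1 means the two vectors point in almost the same direction, and a score near 0 means they have little in common. Where exactly to draw the line between "same voice" and "different voice" has to be tuned on real recordings.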

The Road Ahead With Deepfakes

An investigation by Deeptrace Labs last year found more than 14,000 deepfake videos lurking online. It also noted a jump of 84% in their production in a span of just seven months. Interestingly, more than 90% of deepfake videos are pornographic material in which famous women are face-swapped into porn.

As deepfake technology gains serious traction, it poses a serious threat not just to the privacy but also to the dignity of individuals. Ironically, artificial intelligence itself is being used to counter AI-powered deepfakes. Although ‘good’ AI is helping to identify deepfakes, such detection systems rely heavily on the data sets they are trained on. This means they can work well for detecting deepfake videos of celebrities, about whom a vast amount of data is available, but detecting a deepfake of a person with a low profile is challenging for them.

Social media tech giants are working on deepfake detection systems as well. Facebook recently announced that it is working on an automated system to identify deepfake content on its platform and weed it out. On similar lines, Twitter proposed flagging deepfakes and eliminating them if they’re found to be provocative.

Although we acknowledge and appreciate efforts by these tech companies, only time will tell how successful they are at keeping malicious deepfakes at bay!
