Infrared waves are associated with heat because the energy of an infrared photon roughly matches the spacing between the vibrational (and, at the long-wavelength end, rotational) energy levels of molecules. Instead of lifting electrons to higher energy levels, infrared mostly sets whole molecules spinning and vibrating, leading to the haphazard jiggling of molecules. It is this jiggling that raises body temperature and signifies the presence of heat, which we equate with warmth.

The most common application of infrared radiation I can think of is the television remote, which communicates with a television by encoding information in an infrared wave. If these waves imparted “heat”, standing between the two devices wouldn’t bring nearly as much joy as your annoying sibling seems to derive from it. In fact, the smile on their face is far more radiant than the infrared “light” your remote emits.

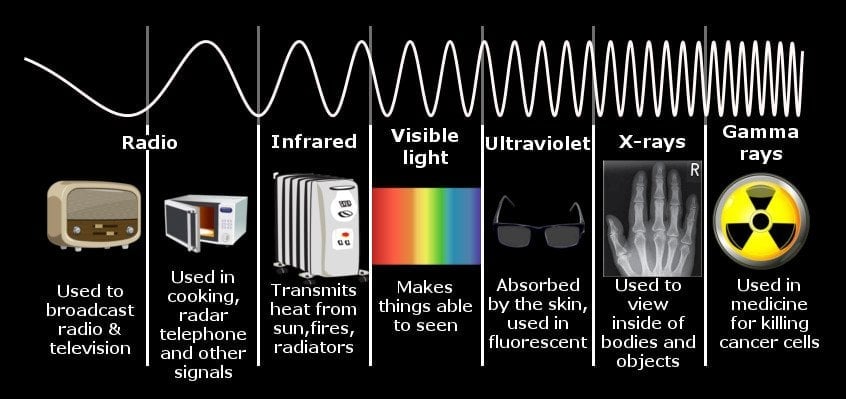

An infrared wave, like X-rays or visible light, is a form of electromagnetic radiation. Its range lies between microwaves and visible light: its wavelengths run from about 1 mm, where microwaves end, down to about 700 nm, where visible red light begins.

As the wavelength of electromagnetic radiation decreases, the energy carried by each of its photons increases. Gamma rays produced by supernova explosions and the X-rays aimed at your body in your nearest hospital have the shortest wavelengths and, consequently, the highest photon energies. Doesn’t that imply that they should be equated with “heat” more often than infrared?
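To put numbers on this, here is a minimal Python sketch of the photon-energy formula E = hc/λ, where h is Planck’s constant and c is the speed of light. It evaluates the formula at the two edges of the infrared band and at one X-ray and one gamma-ray wavelength. The 1 nm and 1 pm values are illustrative picks, not official band boundaries.

```python
# Photon energy from wavelength: E = h * c / wavelength.
PLANCK_H = 6.62607015e-34      # Planck's constant, J*s
LIGHT_C = 2.99792458e8         # speed of light in vacuum, m/s
JOULES_PER_EV = 1.602176634e-19


def photon_energy_ev(wavelength_m: float) -> float:
    """Energy carried by one photon of the given wavelength, in electronvolts."""
    return PLANCK_H * LIGHT_C / wavelength_m / JOULES_PER_EV


# The two infrared edges quoted in the text, plus one assumed X-ray
# wavelength and one assumed gamma-ray wavelength (illustrative only).
SAMPLES = {
    "far-infrared edge (1 mm)": 1e-3,
    "near-infrared edge (700 nm)": 700e-9,
    "X-ray (1 nm, assumed)": 1e-9,
    "gamma ray (1 pm, assumed)": 1e-12,
}

for label, wavelength in SAMPLES.items():
    print(f"{label:30s} {photon_energy_ev(wavelength):.3g} eV")
```

Because E is inversely proportional to λ, shrinking the wavelength a millionfold (from 1 mm to 1 nm) makes each photon a million times more energetic.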

To answer this question, we must first brush up on the concepts that describe the relationship between heated objects and electromagnetic waves. In other words, we must understand the phenomenon of black-body radiation.

Black Body Radiation

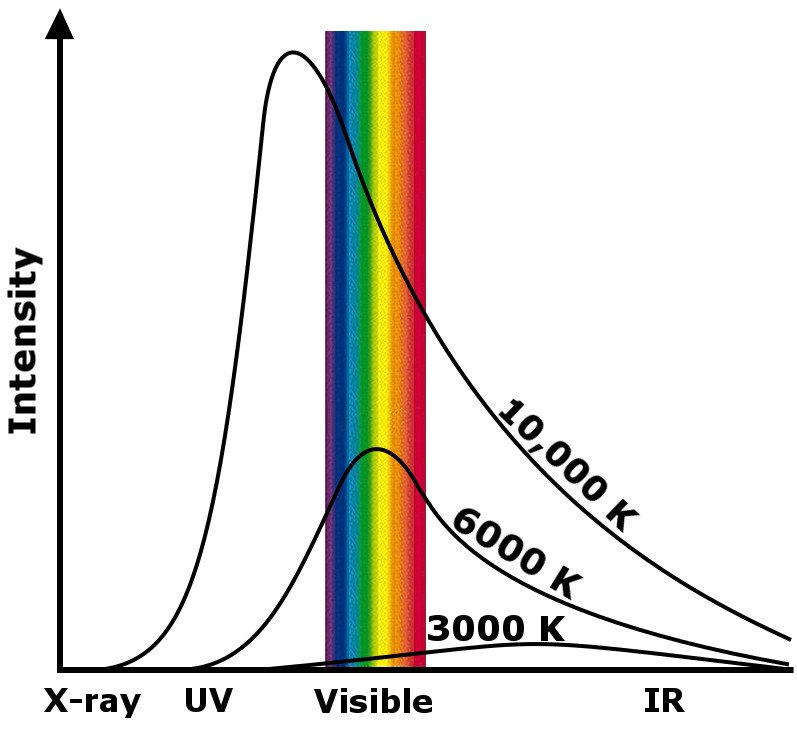

All objects with a temperature above absolute zero (0 K, or -273.15 °C) emit energy in the form of electromagnetic radiation. A “black” body is an idealized object that absorbs every speck of radiation falling on it, reflecting absolutely nothing, so that the radiation it gives off depends only on its temperature.

That first sentence is very important. It says that every object emits some amount of electromagnetic radiation. The energy of each photon in this radiation is given by E = hc/λ, where ‘h’ is Planck’s constant, ‘c’ is the speed of light, and ‘λ’ is the wavelength of the EM wave. The hotter a body is, the shorter the wavelength at which its emission peaks (Wien’s displacement law), so hotter bodies emit more energetic photons and radiate far more power overall. This explains why the surfaces of neutron stars, which can be around a million kelvin, glow in X-rays. However, it also implies that, technically, even a cold slab of ice produces “heat”.
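Wien’s displacement law, λ_max = b/T, where b ≈ 2.898 × 10⁻³ m·K, gives a quick way to see where different objects emit most strongly. The sketch below applies it to a handful of sources. Apart from the melting point of ice, the temperatures are rough assumptions, and the 400 nm and 10 nm cut-offs used for labelling bands are approximate conventions.

```python
# Wien's displacement law: a black body at temperature T emits most strongly
# at wavelength lambda_max = b / T.
WIEN_B = 2.897771955e-3  # Wien's displacement constant, m*K


def peak_wavelength_m(temperature_k: float) -> float:
    """Wavelength (m) at which a black body at temperature_k emits most strongly."""
    if temperature_k <= 0:
        raise ValueError("temperature must be above absolute zero")
    return WIEN_B / temperature_k


def band_name(wavelength_m: float) -> str:
    """Rough band label; the 400 nm and 10 nm cut-offs are approximate conventions."""
    if wavelength_m > 1e-3:
        return "microwave or radio"
    if wavelength_m > 700e-9:
        return "infrared"
    if wavelength_m > 400e-9:
        return "visible"
    if wavelength_m > 10e-9:
        return "ultraviolet"
    return "X-ray or shorter"


# Temperatures in kelvin; all except the melting point of ice are rough assumptions.
SOURCES = {
    "slab of ice": 273.15,
    "human body": 310.0,
    "glowing coal": 1000.0,
    "Sun's surface": 5772.0,
    "neutron star surface": 1.0e6,
}

for name, temp in SOURCES.items():
    peak = peak_wavelength_m(temp)
    print(f"{name:22s} {temp:>10.0f} K  peak {peak * 1e9:>9.1f} nm  ({band_name(peak)})")
```

Under these assumptions, ice, people and glowing coal all peak in the infrared. The Sun peaks in the visible, and only something as hot as a neutron star’s surface pushes the peak into X-rays.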

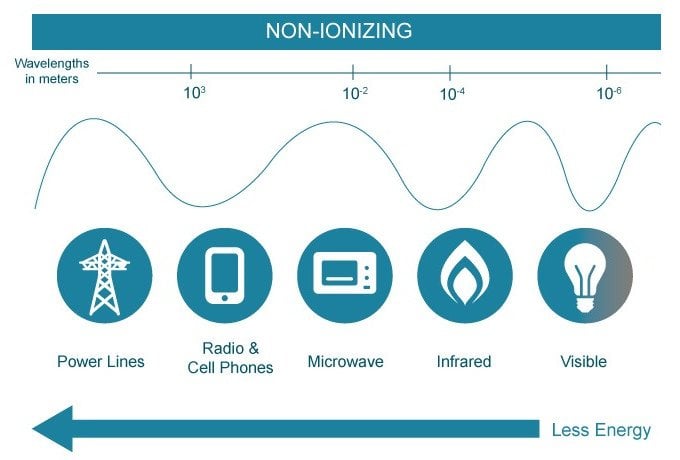

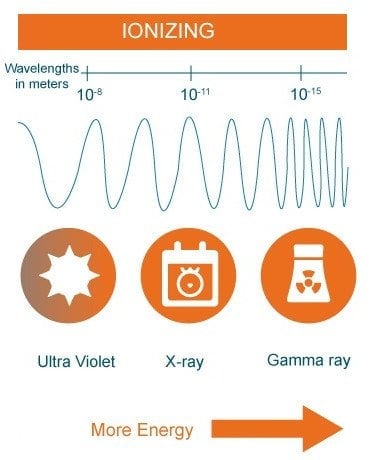

The entire spectrum of wavelengths given off by heated objects can be divided into two types of radiation: ionizing and non-ionizing.

Ionizing Radiation

Ionizing radiation is radiation that has enough energy to remove electrons from atoms, thereby ionizing them. X-rays, gamma rays and the shortest-wavelength UV rays are so energetic that their interactions with matter give the impression that they behave as particles rather than waves. Because their wavelengths are so tiny, they interact only weakly with the molecules on the skin’s surface and instead interact with entities of a comparable size, such as atoms and their electrons.

A collision with an electron can brutally knock it off its atom! The harm done by ionizing radiation comes not mainly from immense warmth or severe burns; rather, it penetrates much deeper and damages tissues beneath our skin. The damage doesn’t end there. Because it ionizes atoms, it can break apart vital molecules such as DNA and damage chromosomes, which interferes with the intricate machinery of genetics and causes unwanted mutations.

Although widely used in power plants and hospitals, ionizing radiation has earned a sinister reputation for causing cancer and a number of other fatal diseases. Ionizing radiation isn’t visible to the human eye and must be detected using specialized devices, such as a Geiger counter.
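The line between ionizing and non-ionizing radiation comes down to whether a single photon carries enough energy to free an electron. The sketch below classifies wavelengths against a threshold. It assumes a boundary of 10 eV, a commonly quoted rough value rather than a sharp constant, since real ionization energies depend on the atom (13.6 eV for hydrogen). The helper names are hypothetical.

```python
# Classify a wavelength as ionizing if one photon carries at least a
# threshold energy. ASSUMPTION: 10 eV is a commonly quoted rough boundary;
# real ionization energies depend on the atom (13.6 eV for hydrogen).
PLANCK_H = 6.62607015e-34      # Planck's constant, J*s
LIGHT_C = 2.99792458e8         # speed of light, m/s
JOULES_PER_EV = 1.602176634e-19
IONIZING_THRESHOLD_EV = 10.0   # assumed boundary, not a sharp physical constant


def photon_energy_ev(wavelength_m: float) -> float:
    """Energy of one photon, in eV (E = h * c / wavelength)."""
    return PLANCK_H * LIGHT_C / wavelength_m / JOULES_PER_EV


def is_ionizing(wavelength_m: float, threshold_ev: float = IONIZING_THRESHOLD_EV) -> bool:
    """True if a single photon meets or exceeds the assumed ionization threshold."""
    return photon_energy_ev(wavelength_m) >= threshold_ev


def threshold_wavelength_m(threshold_ev: float = IONIZING_THRESHOLD_EV) -> float:
    """Longest wavelength whose photons still reach threshold_ev."""
    return PLANCK_H * LIGHT_C / (threshold_ev * JOULES_PER_EV)


print(f"threshold wavelength: {threshold_wavelength_m() * 1e9:.0f} nm")
for wavelength in (10e-6, 700e-9, 200e-9, 91.2e-9, 1e-9):
    print(f"{wavelength * 1e9:>8.1f} nm  ionizing={is_ionizing(wavelength)}")
```

With a 10 eV threshold, only wavelengths shorter than about 124 nm qualify. That is why only the shortest-wavelength UV joins X-rays and gamma rays on the ionizing side, while 200 nm UV, visible light and infrared do not.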

Non-Ionizing Radiation

This type of electromagnetic radiation does not have enough energy to ionize an atom or molecule, meaning that it cannot completely remove an electron from it. Its comparatively longer wavelengths let it interact with whole molecules rather than subatomic constituents. At most, ultraviolet and visible light can excite electrons to higher energy levels, while infrared and microwaves mainly make molecules rotate and vibrate.

These electromagnetic waves act mostly at the surface of our skin and, in the case of UV light, on tissues just beneath it. Whether the absorbed energy first excites electrons or goes directly into molecular motion, it ends up as rotational and vibrational energy, leading to the haphazard jiggling of molecules. It is this jiggling that raises body temperature and signifies the presence of heat, which we equate with warmth.
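Spectroscopists usually quote molecular vibrations as wavenumbers in cm⁻¹. Converting a few typical values shows why it is infrared, not visible light, that sets molecules vibrating. The band positions below are approximate textbook values chosen for illustration, and the function names are hypothetical.

```python
# Convert spectroscopic wavenumbers (cm^-1) to wavelength and photon energy.
HC_EV_CM = 1.239841984e-4  # h*c expressed in eV*cm


def wavenumber_to_wavelength_um(wavenumber_cm: float) -> float:
    """Wavelength in micrometres for a wavenumber in cm^-1 (1 cm = 10^4 um)."""
    return 1e4 / wavenumber_cm


def wavenumber_to_energy_ev(wavenumber_cm: float) -> float:
    """Photon energy in eV for a wavenumber in cm^-1 (E = h*c*wavenumber)."""
    return HC_EV_CM * wavenumber_cm


# Approximate textbook band positions, chosen only for illustration.
VIBRATIONS = {
    "O-H stretch": 3400.0,
    "C=O stretch": 1700.0,
    "H-O-H bend (water)": 1595.0,
}

for mode, wavenumber in VIBRATIONS.items():
    wl = wavenumber_to_wavelength_um(wavenumber)
    energy = wavenumber_to_energy_ev(wavenumber)
    print(f"{mode:20s} {wavenumber:>6.0f} cm^-1 -> {wl:5.2f} um, {energy:.3f} eV")
```

Each of these lands between 0.7 and 1,000 micrometres, inside the infrared band. The corresponding photon energies, a few tenths of an eV, are well below the roughly 1.8 eV of red light.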

Looking at the spectrum, one might conclude that microwaves transmit the least heat, while visible and UV light transmit the most. This is only partly true. UV light does inflict burns when it reaches your skin. However, much of the Sun’s UV light (all of the UV-C and most of the UV-B) is absorbed by ozone high in the atmosphere, where its energy becomes heat that is eventually radiated away as infrared.

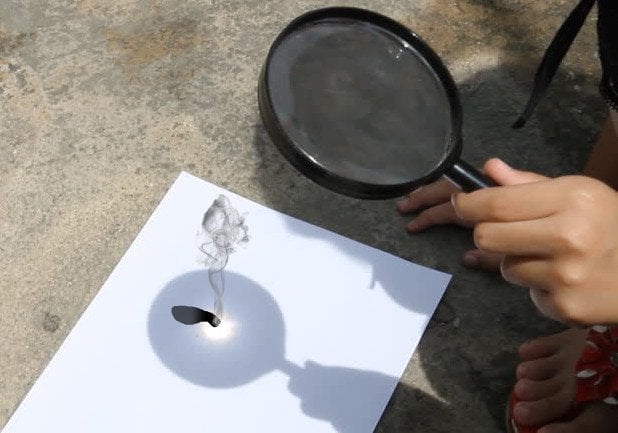

Also, visible light doesn’t burn as much as you’d expect partly because it is scattered by the atoms and molecules of the atmosphere, especially in the dense lower troposphere, and partly because sunlight’s energy is spread over a large area. Its ability to burn becomes obvious when sunlight is concentrated with a magnifying glass onto a thin piece of paper.
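A magnifying glass does not add energy. It collects the light falling on the whole lens and squeezes it into a tiny spot, so the irradiance rises by the ratio of the two areas. The sketch below assumes about 1000 W/m² of sunlight on a clear day, a 10 cm lens and a 2 mm focal spot, and ignores lens losses. These are illustrative values, not measurements.

```python
import math


def focused_irradiance(irradiance_w_m2: float, lens_diameter_m: float,
                       spot_diameter_m: float, transmission: float = 1.0) -> float:
    """Irradiance (W/m^2) at the focal spot of a lens.

    Assumes every watt passing through the lens lands uniformly in the spot;
    `transmission` is the fraction of light the lens lets through.
    """
    lens_area = math.pi * (lens_diameter_m / 2) ** 2
    spot_area = math.pi * (spot_diameter_m / 2) ** 2
    collected_power_w = irradiance_w_m2 * lens_area * transmission
    return collected_power_w / spot_area


# Assumed values: ~1000 W/m^2 of sunlight, a 10 cm lens, a 2 mm spot.
sunlight = 1000.0
spot = focused_irradiance(sunlight, lens_diameter_m=0.10, spot_diameter_m=0.002)
print(f"concentration factor: {spot / sunlight:.0f}x")
print(f"irradiance at focus: {spot / 1e4:.0f} W/cm^2")
```

The concentration factor is simply (lens diameter / spot diameter)², here (100 mm / 2 mm)² = 2500, which is enough to scorch paper.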

Concentration matters just as much for microwaves and radio waves. Our ovens would be incapable of heating a cold, hardened slice of pizza if the microwaves weren’t confined at high intensity inside a small metal box. Remember, their heating capability was first noticed by Percy Spencer when a chocolate bar in his pocket melted while he was standing beside a working magnetron. Because broadcast radio waves spread out over enormous areas, their intensity at any one spot is tiny, so the many radio signals passing through you every day do not inflict any burns.
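A rough inverse-square comparison shows how large the difference in concentration is. The sketch assumes a 50 kW broadcast transmitter radiating equally in all directions (real antennas are directional), a listener 1 km away, and a 1000 W oven whose power is spread over a 0.3 m × 0.3 m cavity floor. It ignores standing waves and absorption, so treat the result as an order-of-magnitude illustration only.

```python
import math


def isotropic_intensity(power_w: float, distance_m: float) -> float:
    """Intensity (W/m^2) at distance_m from a source radiating power_w equally
    in all directions (inverse-square law)."""
    return power_w / (4 * math.pi * distance_m ** 2)


def cavity_intensity(power_w: float, width_m: float, depth_m: float) -> float:
    """Very rough oven estimate: magnetron power spread over the cavity's floor area."""
    return power_w / (width_m * depth_m)


# Assumed, illustrative numbers: a 50 kW transmitter heard 1 km away
# (treated as isotropic), and a 1000 W oven with a 0.3 m x 0.3 m cavity.
radio = isotropic_intensity(50e3, 1000.0)
oven = cavity_intensity(1000.0, 0.3, 0.3)
print(f"radio at 1 km: {radio:.4f} W/m^2")
print(f"inside oven:   {oven:.0f} W/m^2")
print(f"ratio:         {oven / radio:.1e}")
```

Under these assumptions, the oven’s cavity is millions of times more intense than the broadcast signal reaching you a kilometre away.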

Any burning entity, such as a blazing lump of coal, emits a variety of electromagnetic radiation, including visible light, UV and, in principle, vanishingly small traces of X-rays, but most notably, it emits infrared light. Radiation emitted because of an object’s temperature is called thermal radiation, and for everyday sources it falls almost entirely in the non-ionizing part of the spectrum. At lower temperatures, thermal radiation is practically restricted to infrared light, but at higher temperatures, it extends through the infrared into the visible and UV, depending on the body’s temperature.
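Planck’s law, B_λ(T) = (2hc²/λ⁵) / (e^{hc/(λkT)} − 1), describes how a black body’s emission is spread across wavelengths. The sketch below integrates it numerically to estimate what share of the output falls in the UV (10 to 400 nm), visible (400 to 700 nm) and infrared (700 nm to 1 mm). It assumes idealized black bodies at roughly 1000 K (glowing coal), 3000 K (an incandescent filament) and 5772 K (the Sun’s surface). The trapezoid rule on a logarithmic grid is one reasonable choice of numerical method, not the only one.

```python
import math

H = 6.62607015e-34   # Planck's constant, J*s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K
# Stefan-Boltzmann constant derived from the constants above.
SIGMA = 2 * math.pi ** 5 * K_B ** 4 / (15 * H ** 3 * C ** 2)


def planck_radiance(wavelength_m: float, temperature_k: float) -> float:
    """Planck's law: spectral radiance of a black body, W / (m^2 sr m)."""
    x = H * C / (wavelength_m * K_B * temperature_k)
    if x > 700:  # exp(x) would overflow a float; emission here is negligible
        return 0.0
    return 2 * H * C ** 2 / wavelength_m ** 5 / math.expm1(x)


def band_radiance(lo_m: float, hi_m: float, temperature_k: float,
                  steps: int = 5000) -> float:
    """Integrate planck_radiance from lo_m to hi_m with the trapezoid rule.

    The grid is uniform in u = ln(wavelength), so d(wavelength) = wavelength * du.
    """
    u_lo, u_hi = math.log(lo_m), math.log(hi_m)
    du = (u_hi - u_lo) / steps
    total = 0.0
    for i in range(steps + 1):
        wavelength = math.exp(u_lo + i * du)
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * planck_radiance(wavelength, temperature_k) * wavelength
    return total * du


def band_fractions(temperature_k: float) -> dict:
    """Share of total emission in UV (10-400 nm), visible (400-700 nm), IR (700 nm-1 mm)."""
    total = SIGMA * temperature_k ** 4 / math.pi  # radiance integrated over all wavelengths
    return {
        "uv": band_radiance(10e-9, 400e-9, temperature_k) / total,
        "visible": band_radiance(400e-9, 700e-9, temperature_k) / total,
        "infrared": band_radiance(700e-9, 1e-3, temperature_k) / total,
    }


for temp in (1000.0, 3000.0, 5772.0):
    shares = band_fractions(temp)
    print(f"{temp:>6.0f} K  " + "  ".join(f"{k} {v:.1%}" for k, v in shares.items()))
```

At about 1000 K, practically all of the output is infrared. Even at the Sun’s surface temperature, roughly half still lies beyond 700 nm.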

Similar to X-rays and gamma rays, infrared cannot be seen with the naked eye. If it could, you would see a glimmering, almost hallucinatory shade of red around every object. However, scientists have developed special cameras to detect this radiation, which is given off by every object, even at modest temperatures, be it the invisible rays emanating from my remote or from a cube of ice.
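The Stefan–Boltzmann law, M = εσT⁴, estimates how much power each square metre of a surface radiates. It shows that even ice is far from “dark” in the infrared. The sketch treats every object as an ideal black body (emissivity ε = 1). The wall and skin temperatures are rough assumptions, and ice is taken at its melting point.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def radiant_exitance(temperature_k: float, emissivity: float = 1.0) -> float:
    """Power emitted per square metre of surface, W/m^2 (Stefan-Boltzmann law)."""
    return emissivity * SIGMA * temperature_k ** 4


# Idealized black bodies (emissivity 1); temperatures other than ice are assumptions.
OBJECTS = {
    "cube of ice": 273.15,
    "room-temperature wall": 293.0,
    "human skin": 306.0,
}

for name, temp in OBJECTS.items():
    print(f"{name:22s} {temp:6.1f} K  {radiant_exitance(temp):6.1f} W/m^2")
```

Even the ice radiates more than 300 W per square metre. A thermal camera builds its image from differences in emission like these.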

Some animals, such as certain snakes, have “pits” that detect infrared radiation and allow them to “see” warm prey in the dark and expertly hunt at night! Just like the alien hunter in the movie Predator.

We associate “heat” only with infrared waves because we’re accustomed to sources that generate almost exclusively non-ionizing radiation; we’re highly unlikely to encounter a neutron star on our planet. The most common sources of heat around us are the Sun, fire, our own warm bodies and light bulbs, all of which emit radiation mostly in the infrared and visible range.

This scarcity of high-energy sources has narrowed our everyday notion of heat to radiation that merely makes us warm, but the truth is that radiation of any frequency produces heat when it is absorbed.